Introduction

With the release of APEX 24.1, Generative AI has come to APEX. Several new AI features are immediately useful to anyone looking to incorporate Generative AI into their APEX Apps. More importantly, it represents a statement of intent that Oracle plans bigger things for APEX in the AI space. This can only be good news for us developers and our users.

This post will focus on the new APEX_AI PL/SQL API and the Open AI Assistant Dynamic Action. I will describe how I use the APEX_AI API to classify blog posts on my APEX Developer Blogs website and how to use the Open AI Assistant Dynamic Action to ask questions about blog posts.

Use Case

The APEX Developer Blogs site has an APEX Automation, which runs every evening to capture new posts for registered blogs. Because people don't always blog about the same thing, I don't publish all the blogs that get posted. The Automation brings the posts into a holding table to be assessed for relevance to APEX Developers. Before AI, this meant manually scoring each blog post. I score each post on a 0-5 relevance rating (5 being most relevant) for these five categories:

Oracle Application Express (APEX)

Oracle REST Data Services (ORDS)

Oracle Cloud Infrastructure (OCI)

Oracle SQL (SQL)

Oracle PL/SQL (PLSQL)

A blog post is excluded if the total score for these categories is less than three.

💁

In short, this was painful to do manually, and honestly, I went with my gut feeling most of the time.

The Initial Solution

My initial approach was to write PL/SQL code (about 200 lines) to call the Open AI Chat Completions API for each new post, along with a system prompt telling Open AI how I wanted it to score the post for relevance. This section describes this initial approach.

System Prompt

One of the first things to master when dealing with Generative AI is the art of prompt engineering. The system prompt is passed to the Open AI API to guide Open AI on what you want it to do with the user message. In this scenario, the user message is the Blog Post content.

After some trial and error, this is the system prompt I came up with.

💡

You are an Oracle Technology blog analyzer assistant. Your goal is to determine if the blog post is related to Oracle Technologies. You will receive the content of a blog post and analyze it. Score the blog post on a scale of 0-5, indicating how relevant the post is to this comma-separated list of topics: Oracle Application Express (APEX), Oracle REST Data Services (ORDS), Oracle Cloud Infrastructure (OCI), Oracle SQL (SQL), Oracle PL/SQL (PLSQL). Return a JSON object with each topic and its score. Use the value part of the topic name inside () as the topic JSON field name. A score of 0 should indicate not relevant at all, and 5 indicates extremely relevant.

Code

I built three main APIs: one to build the JSON payload, one to call Open AI, and one to parse the response and score the blog post. This produced good results, and the solution was elegant (IMHO).

Build Open AI API JSON Payload

FUNCTION ai_payload_json (p_model IN VARCHAR2, p_system_msg IN VARCHAR2, p_user_msg IN VARCHAR2) RETURN CLOB IS l_logger_params logger.tab_param; l_payload_obj json_object_t := json_object_t(); l_format_obj json_object_t := json_object_t(); l_message_obj json_object_t := json_object_t(); lt_messages json_array_t := json_array_t (); BEGIN l_format_obj.put('type', 'json_object'); l_payload_obj.put('model', p_model); l_payload_obj.put('response_format', l_format_obj); l_message_obj.put ('role', 'system'); l_message_obj.put ('content', p_system_msg); lt_messages.append (l_message_obj); l_message_obj.put ('role', 'user'); l_message_obj.put ('content', p_user_msg); lt_messages.append (l_message_obj); l_payload_obj.put('messages', lt_messages); RETURN (l_payload_obj.to_clob()); END ai_payload_json;

Sample JSON Payload sent to Open AI:

{ "model": "gpt-4o", "response_format": { "type": "json_object" }, "messages": [ { "role": "system", "content": "You are a blog analyzer assistant..." }, { "role": "user", "content": "Blog Post Content Goes Here" } ] }

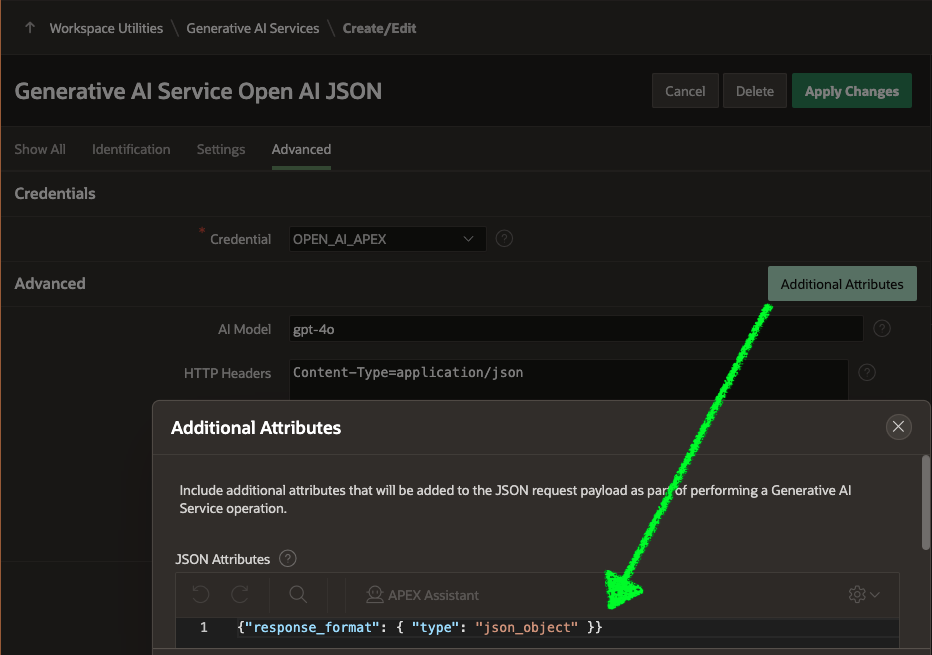

- Important: Setting

response_format.type to json_object ensures we get a JSON Response from Open AI. In addition to setting the value, you must use the word JSON somewhere in your system prompt.

Call Open AI API

PROCEDURE call_open_ai (p_json_payload IN CLOB, x_response OUT CLOB) IS BEGIN apex_web_service.set_request_headers (p_name_01 => 'Content-Type', p_value_01 => 'application/json', p_reset => true); x_response := apex_web_service.make_rest_request (p_url => 'https://api.openai.com/v1/chat/completions', p_http_method => 'POST', p_body => p_json_payload, p_transfer_timeout => 30, p_credential_static_id => 'OPEN_AI'); END call_open_ai;

Sample Open AI Response:

{ "id": "chatcmpl-9e7zWon0C2rwGAJf1YbFQSqq1B2gN", "object": "chat.completion", "created": 1719350866, "model": "gpt-4o-2024-05-13", "choices": [ { "index": 0, "message": { "role": "assistant", "content": "{\n \"APEX\": 5,\n \"ORDS\": 0,\n \"OCI\": 0,\n \"SQL\": 1,\n \"PLSQL\": 0\n}" }, "logprobs": null, "finish_reason": "stop" } ], "usage": { "prompt_tokens": 1631, "completion_tokens": 40, "total_tokens": 1671 }, "system_fingerprint": "fp_efc58689b0" }

- The JSON containing the scores is contained in the

message.content field.

{"APEX": 5, "ORDS": 0, "OCI": 0, "SQL": 1, "PLSQL": 0}

Parse Response and Score Blog Post

PROCEDURE categorize_post (p_plain_content IN cnba_blog_posts.plain_content%TYPE, x_total_score OUT NUMBER) IS CURSOR cr_parse_response (cp_response IN CLOB) IS SELECT * FROM JSON_TABLE(cp_response, '$.choices[*]' COLUMNS (choice_index NUMBER PATH '$.index', message_role VARCHAR2(4000) PATH '$.message.role', message_content VARCHAR2(4000) PATH '$.message.content', logprobs VARCHAR2(4000) PATH '$.logprobs', finish_reason VARCHAR2(4000) PATH '$.finish_reason' ) ); CURSOR cr_scores (cp_scores_json IN VARCHAR2) IS SELECT apex_score , ords_score , oci_score , sql_score , plsql_score , apex_score + ords_score + oci_score + sql_score + plsql_score total_score FROM JSON_TABLE(cp_scores_json, '$' COLUMNS (apex_score NUMBER PATH '$.APEX', ords_score NUMBER PATH '$.ORDS', oci_score NUMBER PATH '$.OCI', sql_score NUMBER PATH '$.SQL', plsql_score NUMBER PATH '$.PLSQL' ) ); l_response_json CLOB; l_payload_clob CLOB; l_system_message cnai_prompts.prompt_value%TYPE; lr_parse_response cr_parse_response%ROWTYPE; lr_scores cr_scores%ROWTYPE; BEGIN x_total_score := 0; l_system_message := 'You are an Oracle Technology blog analyzer assistant...'; l_payload_clob := cn_ai_utl_pk.ai_payload_json (p_model => 'gpt-4o', p_system_msg => l_system_message, p_user_msg => p_plain_content); cn_ai_utl_pk.call_open_ai (p_json_payload => l_payload_clob, x_response => l_response_json); OPEN cr_parse_response (cp_response => l_response_json); FETCH cr_parse_response INTO lr_parse_response; CLOSE cr_parse_response; OPEN cr_scores (cp_scores_json => lr_parse_response.message_content); FETCH cr_scores INTO lr_scores; CLOSE cr_scores; x_total_score := lr_scores.total_score; END categorize_post;

Enter APEX_AI

While the above solution worked well, the APEX_AI API reduced custom code by two-thirds, making it much easier to maintain my code and uptake new features in the future. This section will focus on the current solution, which uses the APEX_AI API.

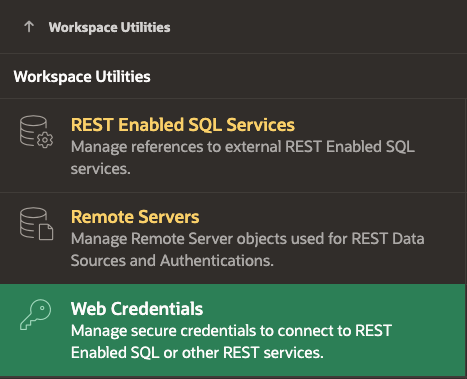

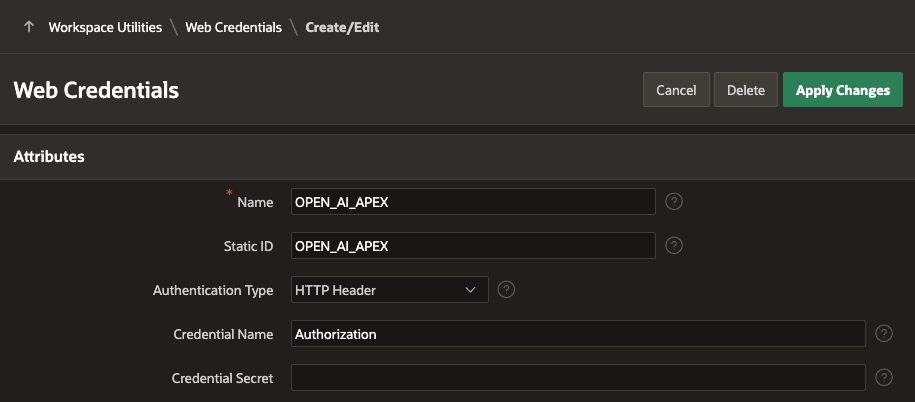

Create an APEX Credential

We need an APEX Credential to store the Open AI Credentials. Navigate to Workspace Utilities > Web Credentials:

Credential Details:

I am using Open AI. I won't go into how to create an API key for Open AI; suffice it to say, it is pretty straightforward. Here is a link to the Open AI Quick Start Tutorial for APIs.

The Credential Secret should be entered as 'Bearer <Open AI Key>'. e.g. Bearer rt-apex-WgqYOhbU0SyoFDDv3weioi4FJMkf289WlxMtMnWqwr0v9

- Don't forget to add the space after the word Bearer and before the token.

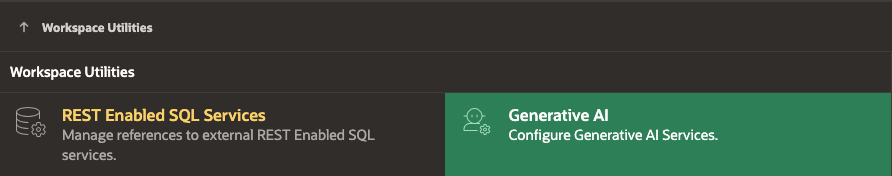

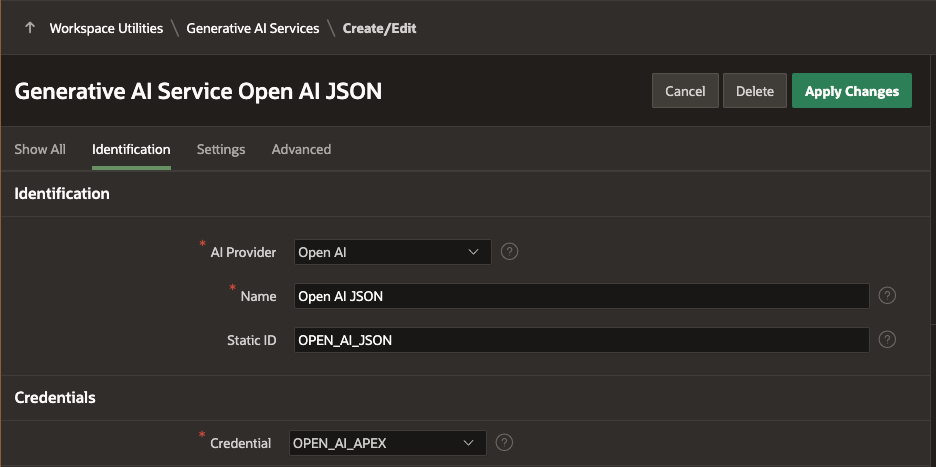

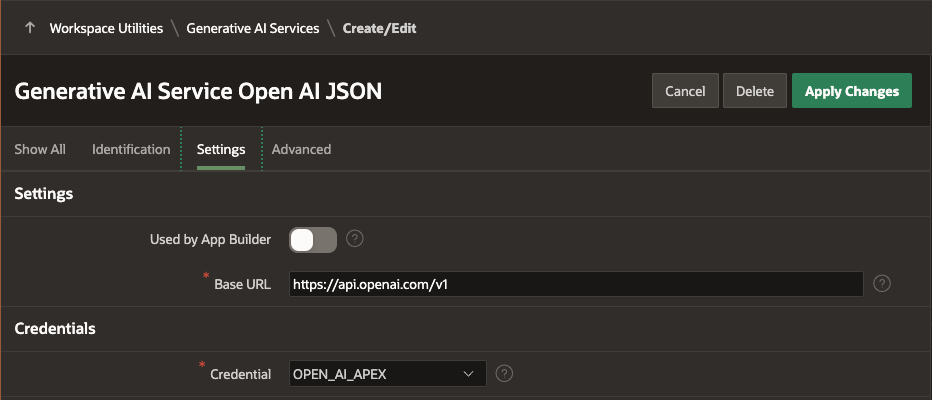

With the Open AI Credentials in place, we can create the APEX Generative AI Service definition. Navigate to Workspace Utilities > Generative AI:

Select the AI Provider , enter a Name and Static ID.

I have named this service 'Open AI JSON' because this service will only work for generating JSON responses (as we will see shortly).

Used by App Builder

- Important: We are asking Open AI only to return a JSON response, so we cannot use this Generative AI Service in the APEX App Builder.

Credential

- Select the credential you created in the previous step.

Update the Code

Now that we have an AI Service configured, we can use the APEX_AI PL/SQL API to do most of the heavy lifting. The final categorization procedure now looks something like this:

PROCEDURE categorize_post (p_plain_content IN cnba_blog_posts.plain_content%TYPE, x_total_score OUT NUMBER) IS CURSOR cr_scores (cp_scores_json IN VARCHAR2) IS SELECT apex_score + ords_score + oci_score + sql_score + plsql_score total_score FROM JSON_TABLE(cp_scores_json, '$' COLUMNS (apex_score NUMBER PATH '$.APEX', ords_score NUMBER PATH '$.ORDS', oci_score NUMBER PATH '$.OCI', sql_score NUMBER PATH '$.SQL', plsql_score NUMBER PATH '$.PLSQL' ) ); l_response_json CLOB; l_system_message VARCHAR2(32000); lr_scores cr_scores%ROWTYPE; l_messages apex_ai.t_chat_messages := apex_ai.c_chat_messages; BEGIN x_total_score := 0; l_system_message := 'You are an Oracle Technology blog analyzer assistant...'; l_response_json := apex_ai.chat (p_prompt => p_plain_content, p_system_prompt => l_system_message, p_service_static_id => 'OPEN_AI_JSON', p_messages => l_messages); OPEN cr_scores (cp_scores_json => l_response_json); FETCH cr_scores INTO lr_scores; CLOSE cr_scores; x_total_score := lr_scores.total_score; END categorize_post;

👉

This represents a dramatic reduction in the code I need to develop and maintain to perform pretty advanced analysis on blog posts.

Open AI Assistant Dynamic Action

The APEX_AI PL/SQL API is not the only AI feature that can be used to incorporate AI into your APEX Apps. The Open AI Assistant Dynamic Action is also available. It lets you declaratively launch a chat session with an AI from APEX.

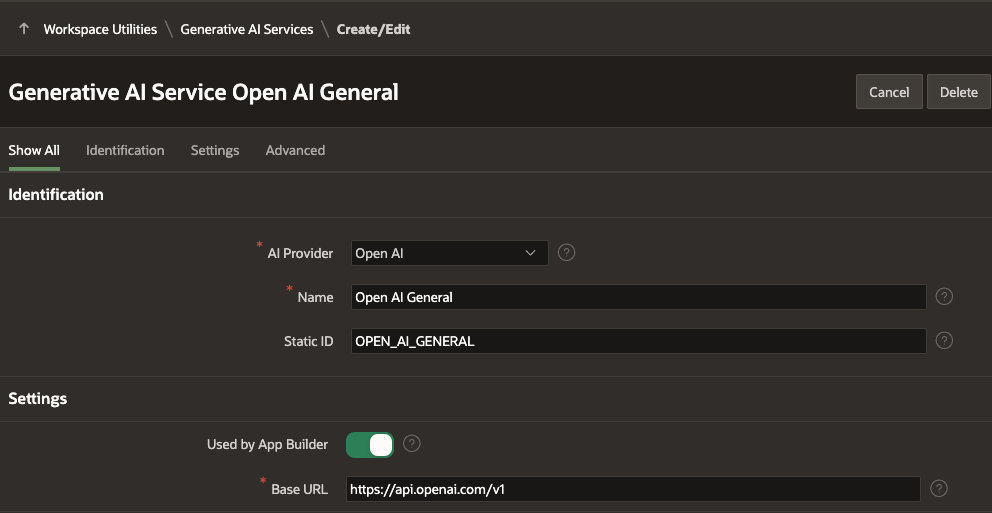

Generative AI Service

This time, we must create an APEX Generative AI Service without the JSON response restrictions.

- I selected

Used by App Builder this time so that I can use this Generative AI service in APEX Application Builder.

Use Case

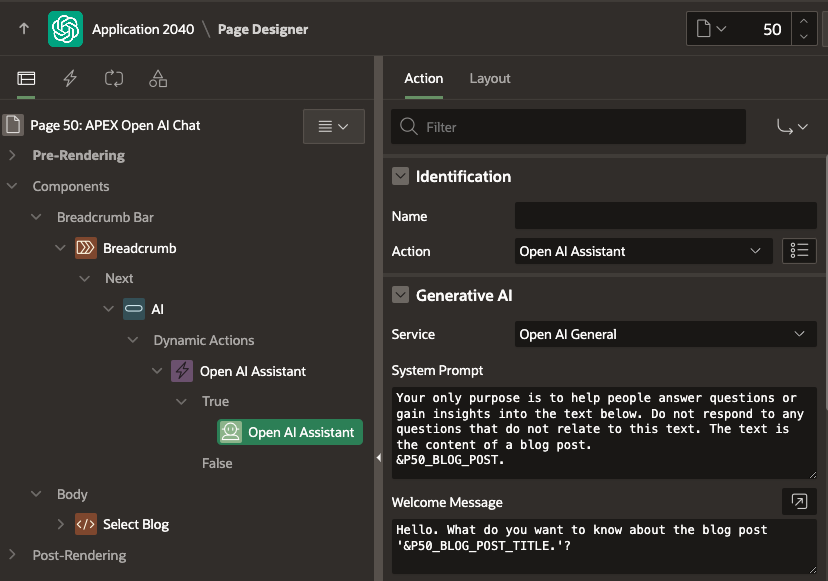

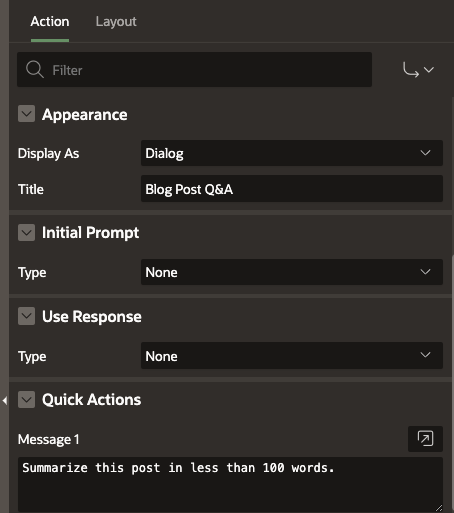

This example will allow users to ask questions about a blog post. I created a button with an On Click Dynamic action which triggers the 'Open AI Assistant' Action:

Service I have selected the 'Open AI General' APEX Generative AI Service defined in the previous section.

System Prompt I have provided some basic instructions to the AI along with the content of the Blog Post from the page item P50_BLOG_POST.

Welcome Message This message will be displayed to the user when the chat session starts. I have included the blog title from the page item P50_BLOG_POST_TITLE.

Display As allows you to show the chat session Inline in a page region or as a popup Dialog.

Title allows you to specify a title for the Dialog.

Use Response allows you to return the chat response to a page item or pass it to some Javascript to handle the response.

Quick Actions allows you to specify a number of pre-defined questions to help the user get started.

Result

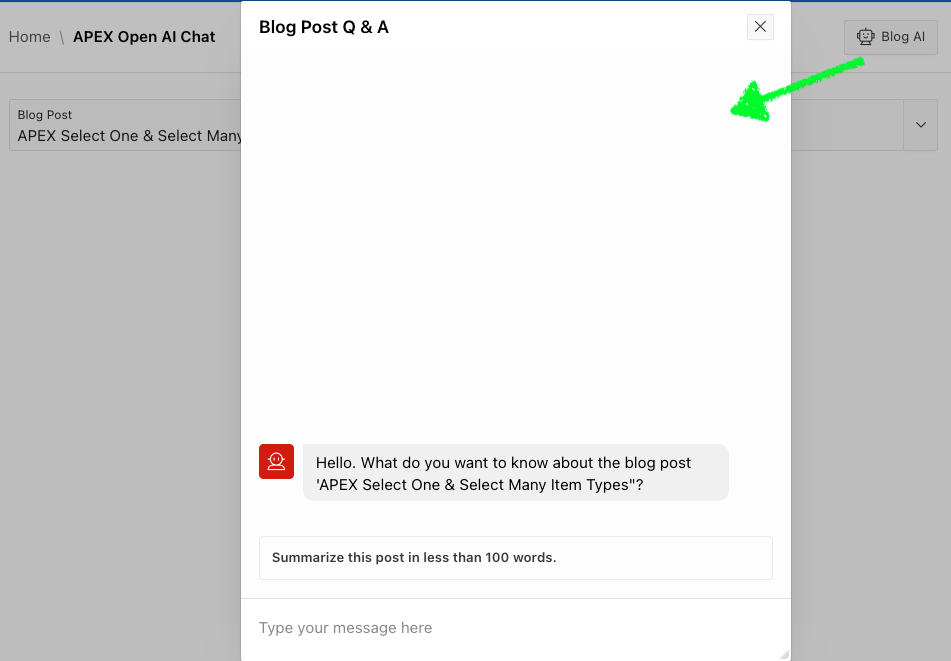

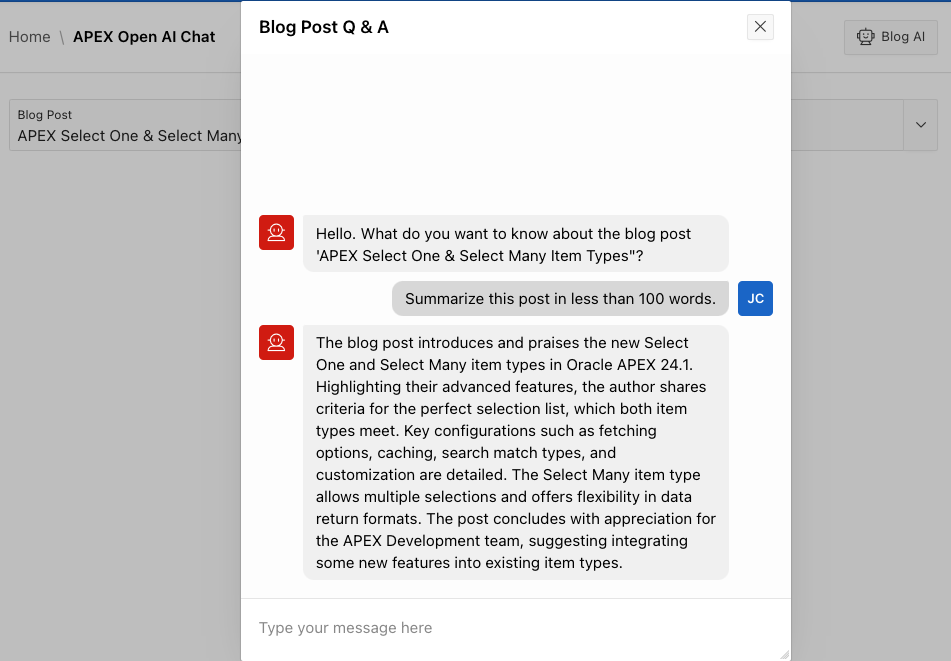

- The user selects a Blog Post and clicks the 'Blog AI' button, which opens a Dialog. The dialog contains the welcome message and a pre-defined question that the user can click to start the conversation.

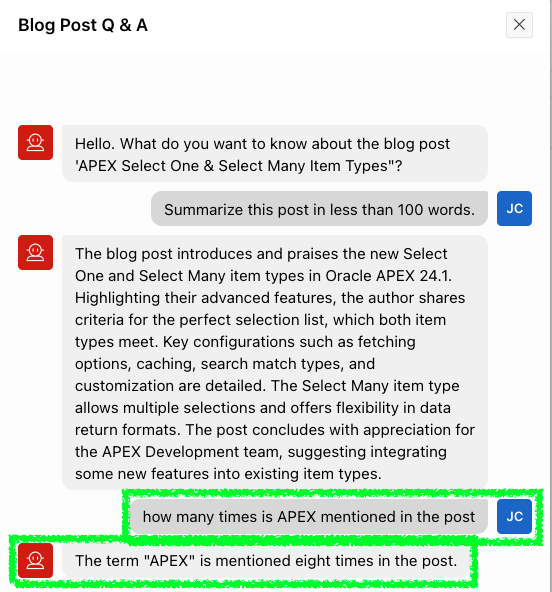

The user can also type their own questions:

😍

That is a lot of functionality for 0 lines of code!

👉

You should know that during the chat conversation, APEX passes the entire chat history to Open AI each time the user submits a question. This includes your context (in the above example, this is the blog post), which can lead to many tokens being consumed. See below for more on tokens.

Tracking Tokens

What is a token? I, of course, asked ChatGPT:

🤖

A token is a unit of text used by OpenAI's language models to encode information. These models break down text into tokens, which can be as small as a character or as large as a word or subword. The tokenization process involves splitting the input text into these units to facilitate processing by the model.

'Prompt Tokens' are consumed based on the text length you pass to the AI API. 'Completion Tokens' are consumed based on the text length the AI API generates for the response. In the AI world, tokens cost money. At the time of writing this post, the Open AI 'gpt-4o' model costs $5.00 for 1M input tokens and $15.00 for 1M input tokens. Open AI API Pricing.

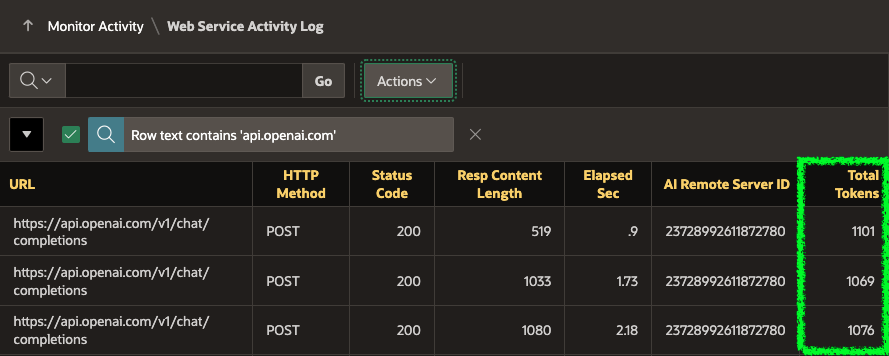

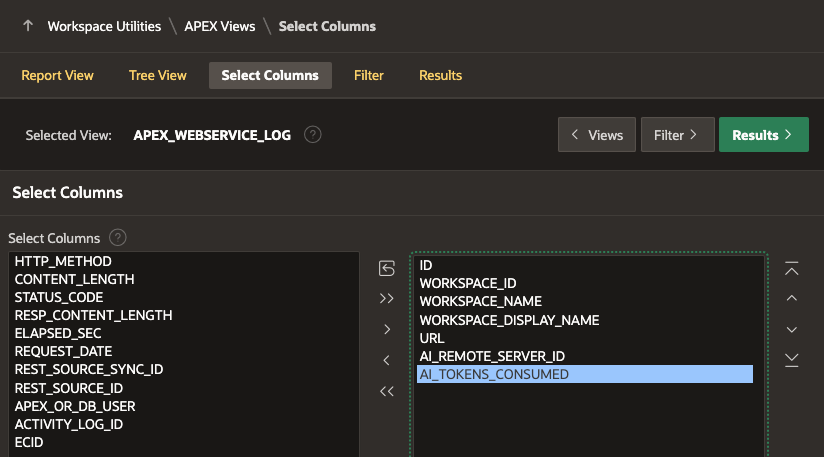

Determining how many tokens are used is essential to understanding your AI costs. APEX has you covered (mostly). Every time the APEX_AI PL/SQL API is called, APEX adds a record to the Web Service Activity log. New in APEX 24.1, this log now includes the total number of tokens used in each request (prompt tokens + completion_tokens).

You can access the Web Service Activity log from APEX Builder:

or from the APEX view:

Other Use Cases

Another use case for the Open AI Assistant Dynamic Action could be providing help to users of an APEX Application via a chat interface.

Another use case for the APEX_AI PL/SQL API is to analyze data from an Interactive Grid. Using Anton Nielsen's plugin get_ig_data, you could get the data from an IG in JSON format and send it to an AI using the APEX_AI PL/SQL API to perform analysis.

💡

For that matter, you could collect any data from your database and send it to an AI for analysis. ⚠️ You should, of course, make sure you are comfortable sharing this data with a third party.

Conclusion

Even though this is a fairly simple use case, I hope this post helps you visualize other use cases where the combination of APEX and AI can bring value to your users.

评论

发表评论